It’s been quite a few months since my first article on AI, and I promised a follow-up. So here it is! That first article focused on jobs, authenticity and society in general, and the impact generative AI could be having on them.

AI has been around in one form or another for some time. As generative AI built on large language models began to take shape, app developers started adding AI to their apps and programs. Google launched its own version of ChatGPT, Bard** (now Gemini); Zoom added AI capabilities such as meeting summaries, real-time transcription and response composition (which seems a bit stupid, given you could type your response in the time it takes to type the prompt); Canva added Magic Studio; and Microsoft added Copilot across its suite of programs to save “hours in writing, sourcing, and editing time” (though note their proviso: “Sometimes Copilot will be right, other times usefully wrong”).

This article focuses on business, specifically the use of ChatGPT and its potential fallout. And to be frank, after just a few months, I have AI fatigue!

AI Fatigue

In the period between that previous article and this one, I’ve observed, taken notes and tried it out. What I’ve noticed is an increasing number of businesses using AI to generate content. For anyone paying attention, it’s painfully obvious when they do.

I find myself clicking a link to an article on LinkedIn, reading a social media post or opening a newsletter only to roll my eyes and think “AI … again”.

It is very apparent when someone uses AI to generate their content, especially when they don’t edit it to make it sound less like AI and more like themselves.

How I’ve Used AI

I’ve given ChatGPT specifically a fair crack. A client asked me to use it, so I did, in this case for blog post SEO – not an area of my expertise, and I told them so. But they had been using it, were happy to continue with what it generated, and just wanted me to take over the task. Here, the AI takes the post content and generates key phrases, an optimised title and a meta description. But even then, I tweak the output so it sounds less like AI.

I’ve used it to do research. A client who works in a field I don’t understand asked me to research something, and I had no idea what search terms to plug into Google. So I gave ChatGPT the task he gave me, then used the results to inform my own Google searches. I even plugged the exact AI-generated text into Google to find the page ChatGPT had pulled the information from, so I could reference it. This also let me run further manual searches on Google.

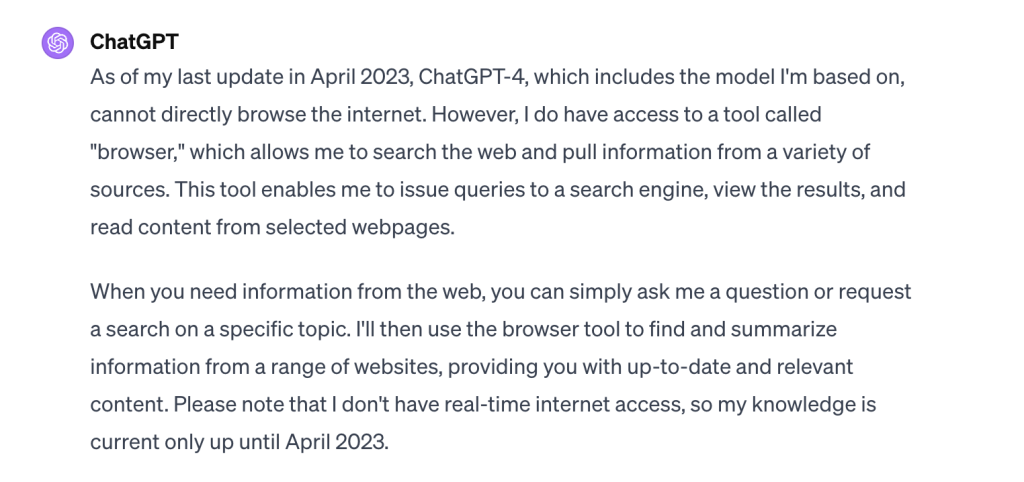

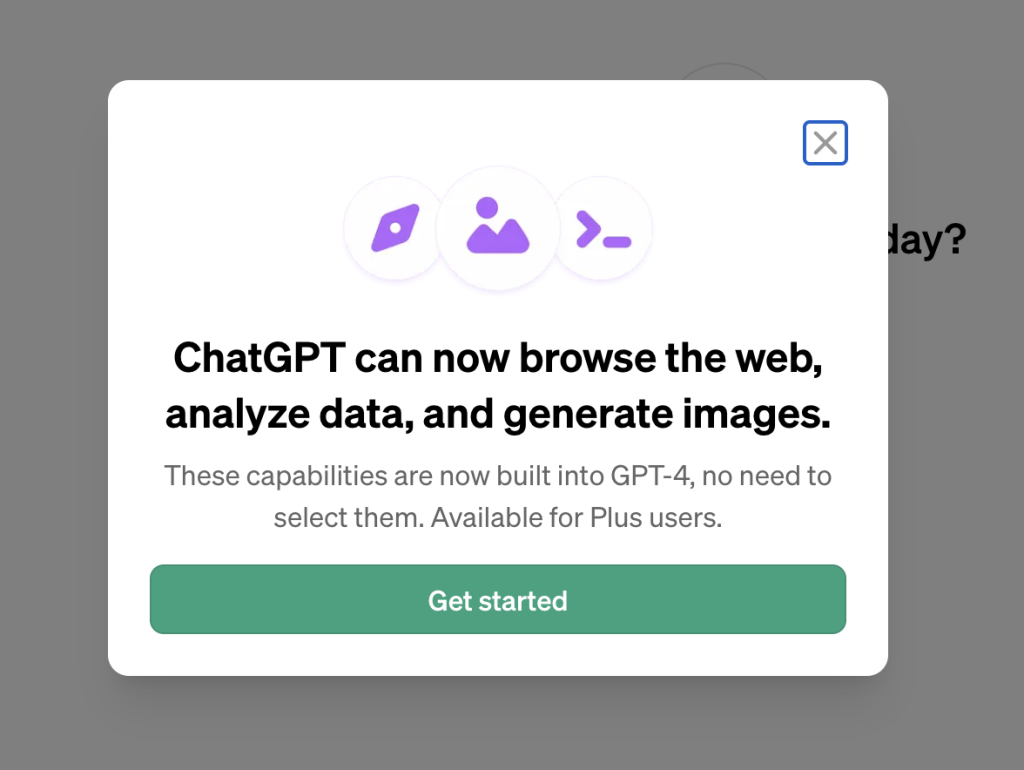

And yes, despite the free GPT-3.5 version’s assertions to the contrary, the bot CAN read pages and visit links. Just upgrade to the paid version with GPT-4, paste a URL into the box together with your query, and, miraculously, this large language model that can’t read web pages, can.

As the screenshots show: it can… it can’t… it can…

Isn’t the definition of ‘browsing the internet’ the ability to ‘read content from selected webpages’?

I’ve also used it to help craft better article titles and social media posts when I can’t think how to summarise things. On social media, AI-generated content is obvious from the words used (see below) and the overuse of emojis, so it’s important to curate it.

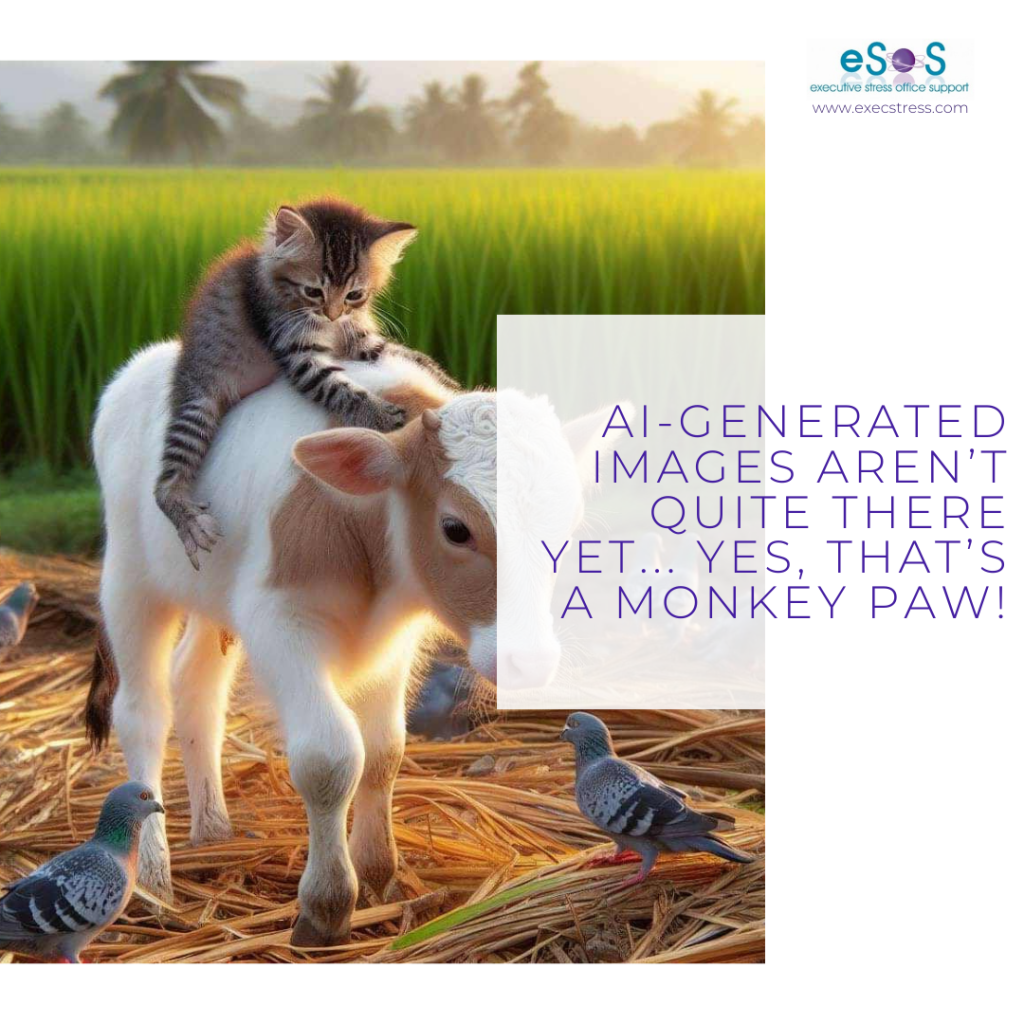

I’ve used GPT-4 to generate a couple of images (which you’ll see on social media posts here and at the podcast) when I couldn’t find exactly what I was looking for online, and, man, was it quick! That made me very sad for the graphic design and art industries. Graphic design may well have to pivot fast if it’s to survive as an industry.

I’d love to hear designers’ thoughts on this and any impacts they’ve seen.

AI and Publishing

I’m part of an authors’ group on Facebook, and a member asked: “If I have AI write my book, can I still put myself down as the author? I came up with the idea and want to ask it to generate the content expanding on the idea.” A few of us commented “probably not”, since they hadn’t actually written the content, and added that they would have to make sure there was no breach of someone else’s IP or copyright, because AI doesn’t come up with original content but draws on existing work scraped from online (one reason behind the 148-day WGA strike, which ended in September 2023).

This is one reason insurance companies are now asking copywriters whether they use AI in their work: there is an increased risk of liability if the AI reproduces copyrighted content.

The member kept asking the question until they got the answer they were after and then turned off comments. That answer: “Yes. Because you had the idea. And copyright is yours as soon as you publish.”

Side note: that’s not quite true. Copyright exists as soon as you create the work (drawing, photo, graphic, written piece), not when it’s published. And changing someone else’s work a little doesn’t make it any less a breach of the original creator’s copyright.

Self-publishing sites like Amazon’s KDP now have a check box asking authors to confirm whether AI has been used to generate the cover, content or illustrations.

Meanwhile, journalists are being asked to use AI like ChatGPT when they are short on content. Does this spell the end of objectivity and rigorous investigation, as journalists grow lazy and print whatever the AI finds while passing it off as properly investigated “news”? Perhaps, as with images, there should be a footnote stating that the content was AI-generated…

At the same time, some schools and universities are banning its use.

AI Images

We’re already seeing people ooh and ahh online over AI-generated images. “Isn’t that beautiful!” they gush, overlooking the fact that the child has six fingers or the cat has a monkey’s foot. AI isn’t quite there yet.

That said, I think AI fatigue will extend to graphics too. An AI-generated image may well serve a short-term purpose, but it can’t elicit the same emotion as the Mona Lisa. It’s obviously fake and lacks the feeling that comes through in human-created design.

(I did not create this image. I found it in a Facebook group.)

Deep Fakes and Misuse

When Google launched Bard, scammers in India and Vietnam immediately exploited it, creating ads that purported to offer a Bard download and tricked business owners into clicking a link that downloaded malware.

I read one blog encouraging readers to use ChatGPT to position themselves as more of an expert. Take a URL, plug it into the AI (reminder: yes, the paid version can read content from a URL you provide) and instruct it to “write an article based on the topics covered in the URL; make the article more comprehensive than the URL provided by adding details that the source URL has missed or didn’t cover. Sound like more of an expert”. In other words, don’t become an expert, just make me sound like one! (Note: that article has since been changed to remove the suggestion to use the bot to ‘sound’ like an expert.)

AI currently provides answers drawn from existing content that humans created and uploaded to the web. In a few years’ time, this will no longer be the case. As people increasingly use AI to generate content and upload it to the web, future generations may well be reading works of poetry, prose, fiction and, potentially, ‘fact’ generated by AI.

Elon Musk warned:

“AI is both a positive and a negative: It has great promise and great capability, but with that also comes great danger. With the discovery of nuclear physics, you had nuclear power generation, but also nuclear bombs.”

Elon Musk

Impact on Brand

AI tends to reuse the same phraseology: ‘navigate’, ‘dynamic’, ‘dive in’, ‘let’s’, ‘ever-evolving’, ‘landscape’ – just to name a few. So when you robotically (pun intended) post what ChatGPT has spewed out for you without even trying to change the voice and make it sound like you, what is that doing to your brand?

As I mentioned, I’ve followed links from LinkedIn to articles and blogs that looked interesting, only to read the first few lines or glance at the layout or title, roll my eyes, say “AI” and read no further.

Is it fair to expect me to spend 10 or 15 minutes reading your content when you took 20 seconds to have AI generate it? Isn’t the implication that you rate your time as more valuable than mine?

Is the content worth as much if it hasn’t come from your expertise and effort? If you’ve put no effort into it beyond a five-second instruction to AI?

Are you dumbing yourself down and devaluing your expertise when you don’t make the effort to put that expertise on paper yourself?

Bottom line: if you can’t be bothered spending your time crafting the content to add value, why should I waste mine reading it?

Lyn Prowse-Bishop

I can understand people doing this when they’re writing on a topic about which they have little or no expertise. But isn’t that just another type of “deep fake”? Why would you write on topics about which you have no expertise?

Ghost in the Machine

My conclusion from this observation, research and hands-on use is that AI has its uses (especially in areas such as agriculture and medicine***, or for people who feel they don’t have the time to create content, email responses or designs themselves and can fall back on the “efficiency” argument), but it should never replace human creation. If it is used to create, especially in a business context, that creation needs to be curated by a human at the very least.

It’s interesting to note the language used around AI: “It’s amazing what it knows!” What it ‘knows’ is what humans have created before it.

It is important to remind ourselves that AI is a machine, not a human, and it is dangerous to treat as human something that has no human emotion and no ability to think critically.

The developers play on our human side by creating an AI that ‘speaks’ to us in very human terms and responds to our input as if it were human, which is why I found myself thanking it for its answers! This is deliberate.

Seth Godin said it this way:

“We know exactly what the code base is, and yet within minutes, most normal humans are happily chatting away, bringing the very emotions to the computer that we’d bring to another person. We rarely do this with elevators or door handles, but once a device gets much more complicated than that, we start to imagine the ghost inside the machine.”

Seth Godin

Who is Training Who?

I believe that in the not-too-distant future, a business’s point of difference may well be that it doesn’t use AI for content creation.

The rise of AI content certainly means due diligence will become even more important when you are considering business partnerships with freelancers, as ‘fake it till you make it’ gets a steroid injection. Should we believe even less of what we read on websites and About pages?

In our quest for greater efficiency, we have to ask: at what cost? Creativity? Critical thinking? Logic? Brand? Authenticity? Respect? Reputation?

As more and more AI-generated content in the form of blogs, articles, newsletters, books and images floods the internet, it raises the question: who is the robot? Who exactly is training who?

Footnotes:

** Google CEO Sundar Pichai reportedly told employees in 2023 that even though Google had similar capabilities to ChatGPT, the company had yet to commit to giving out AI-generated search responses because of the risk of providing inaccurate information. Perhaps this position has changed since the upgrade to Gemini. I’ve not personally trialled Gemini; I did trial Bard, and it was pretty useless.

*** In January 2024 (ironically, considering the quote above?), Elon Musk’s Neuralink reported that its first human patient had received a brain implant, a step towards Musk’s goal of symbiosis between the human brain and AI through neural-interface technology.

Resources:

CNN Business – How Google’s long period of online dominance could end

The Hill – Google suing scammers for fake Bard AI downloading malware

Fortune – Elon Musk lashes out at the ChatGPT sensation he helped to create

Seth’s Blog – The Ghost in the Machine

Business Insider – The story of Neuralink